Testing in the age of AI agents

At Coding Delta, our mission extends beyond helping our clients grow—we also develop and market our own products. One such product is Chatsome, an AI chatbot that integrates seamlessly with our clients' CRM systems.

Transforming Customer Interaction

With our CRM integration, Chatsome becomes an integral part of the company's workforce. The chatbot is equipped with comprehensive knowledge of all products being sold, acting as a digital sales agent available 24/7 to assist visitors.

Moreover, by having access to order information, the chatbot also functions as a digital customer service agent, resolving numerous inquiries without involving human customer service representatives.

How Do Our Agents Work?

Website visitors can pose various questions, ranging from sales inquiries to customer service issues. We have developed an extensive pipeline to determine user intent and the appropriate response.

Given the diversity of our clients' products — some selling door handles, others baby accessories — we built a generic workflow adaptable to any scenario. Despite the differences in products, our system effectively manages them all.

However, the wide range of products poses a challenge: testing whether code changes improve or worsen the bot's performance for specific clients. Initially, with only a few clients, manual testing was feasible. As we scaled up, handling more edge cases, this became impractical.

Additionally, ChatGPT's responses can vary each time it is asked the same question, which makes consistent results hard to guarantee. For example, when asked "Who is the king of the Netherlands?" twice, ChatGPT provided these responses:

Answer 1: The current king of the Netherlands is King Willem-Alexander. He has been the monarch since April 30, 2013, following the abdication of his mother, Queen Beatrix.

Answer 2: The king of the Netherlands is King Willem-Alexander. He has been the reigning monarch since April 30, 2013, following the abdication of his mother, Queen Beatrix. King Willem-Alexander is the first male monarch of the Netherlands in over a century, succeeding a line of three female monarchs.

Although both answers state the same fact, their wording and length differ, so a program that compares them character by character would treat them as different answers.
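To make the problem concrete, here is a minimal sketch in Python using the two answers quoted above. The helper names `exact_match` and `mentions_fact` are our own illustrations, not part of any library, and the keyword check is only a crude stand-in for a real semantic comparison.

```python
# The two ChatGPT answers quoted above, stored as plain strings.
answer_1 = (
    "The current king of the Netherlands is King Willem-Alexander. "
    "He has been the monarch since April 30, 2013, following the "
    "abdication of his mother, Queen Beatrix."
)
answer_2 = (
    "The king of the Netherlands is King Willem-Alexander. He has been "
    "the reigning monarch since April 30, 2013, following the abdication "
    "of his mother, Queen Beatrix. King Willem-Alexander is the first male "
    "monarch of the Netherlands in over a century, succeeding a line of "
    "three female monarchs."
)


def exact_match(actual: str, expected: str) -> bool:
    """Classic assertion style: passes only if the strings are identical."""
    return actual.strip() == expected.strip()


def mentions_fact(actual: str, fact: str) -> bool:
    """Crude illustrative check: does the answer contain the key fact?"""
    return fact.lower() in actual.lower()


print(exact_match(answer_1, answer_2))              # False
print(mentions_fact(answer_1, "Willem-Alexander"))  # True
print(mentions_fact(answer_2, "Willem-Alexander"))  # True
```

An exact comparison rejects a correct answer, while the keyword check accepts both. A keyword check does not scale to open-ended sales and service conversations, though, which is why a more flexible comparison is needed.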

Ensuring Consistent Results

We needed a way to test the bot despite this variability. Traditional testing methods fall short because they assume that a function consistently returns the same output for the same inputs, whereas GPT's responses can vary.

Introducing Our Testing Suite

To address this, we developed an in-house testing suite. For every critical bot interaction, we create a test specifying the chat history and expected output. We then:

- Ask GPT to generate a response based on the provided chat history.

- Start a new GPT session and ask it to compare the response with the expected output.

- Evaluate the result as either "true" or "false."

This approach allows us to verify that code changes do not degrade the bot's responses. If any test fails, we investigate and correct the issue until all tests pass.
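The three steps can be sketched roughly as follows. This is an illustration under our own assumptions, not the actual Chatsome code: `ChatTest`, `run_test`, and the judge prompt are hypothetical names, and the two model calls are passed in as plain functions so the sketch runs without an API key. In a real suite, `generate` and `judge` would each call a GPT model in separate sessions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}


@dataclass
class ChatTest:
    """One critical interaction: the chat so far and the answer we expect."""
    name: str
    history: List[Message]
    expected: str


# Hypothetical judge prompt; the wording is an assumption.
JUDGE_TEMPLATE = (
    "Expected answer:\n{expected}\n\n"
    "Actual answer:\n{actual}\n\n"
    "Does the actual answer convey the same information as the expected "
    "answer? Reply with exactly 'true' or 'false'."
)


def run_test(
    test: ChatTest,
    generate: Callable[[List[Message]], str],
    judge: Callable[[str], str],
) -> bool:
    # Step 1: generate a response from the provided chat history.
    actual = generate(test.history)
    # Step 2: in a fresh session, ask a model to compare it with the expectation.
    prompt = JUDGE_TEMPLATE.format(expected=test.expected, actual=actual)
    verdict = judge(prompt)
    # Step 3: reduce the verdict to a boolean; anything but "true" fails.
    return verdict.strip().lower() == "true"


# Stand-ins for the two model calls, used only to make the sketch runnable.
def fake_generate(history: List[Message]) -> str:
    return "The king of the Netherlands is King Willem-Alexander."


def fake_judge(prompt: str) -> str:
    actual = prompt.split("Actual answer:\n", 1)[1]
    return "true" if "Willem-Alexander" in actual else "false"


test = ChatTest(
    name="king_of_the_netherlands",
    history=[{"role": "user", "content": "Who is the king of the Netherlands?"}],
    expected="The king of the Netherlands is Willem-Alexander.",
)
print(run_test(test, fake_generate, fake_judge))  # True
```

Treating every verdict other than "true" as a failure is a deliberately strict choice: if the judge returns something unexpected, the test fails loudly instead of passing silently.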

Why are LLM tests needed?

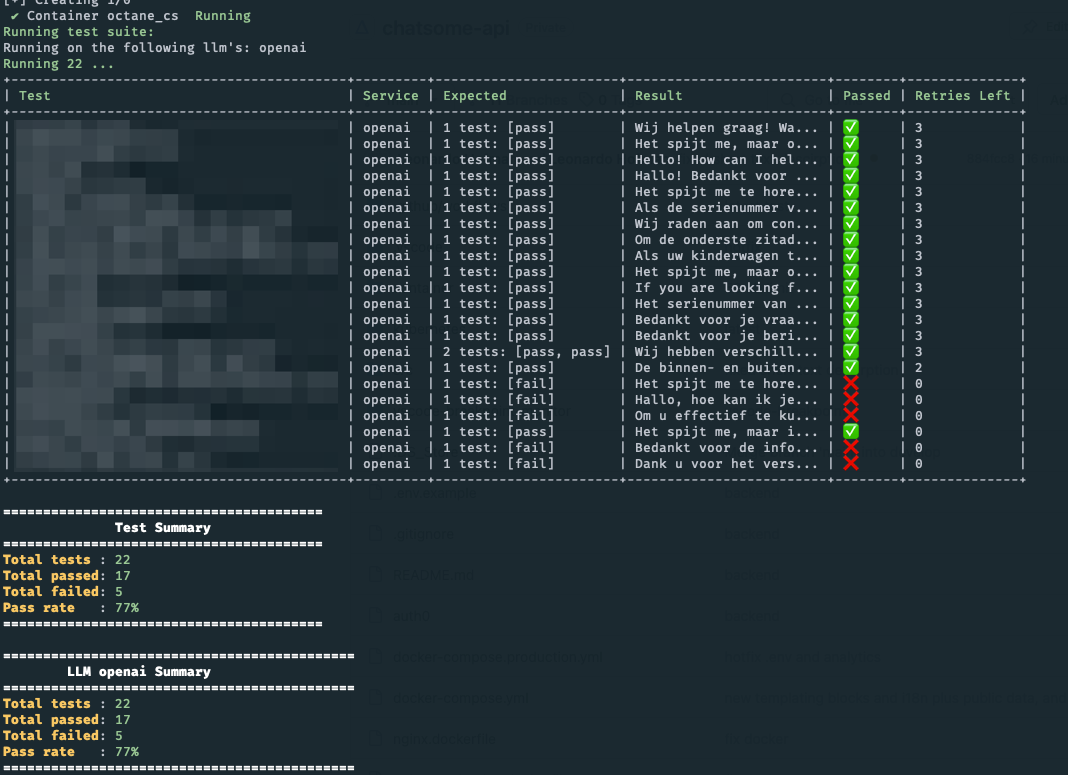

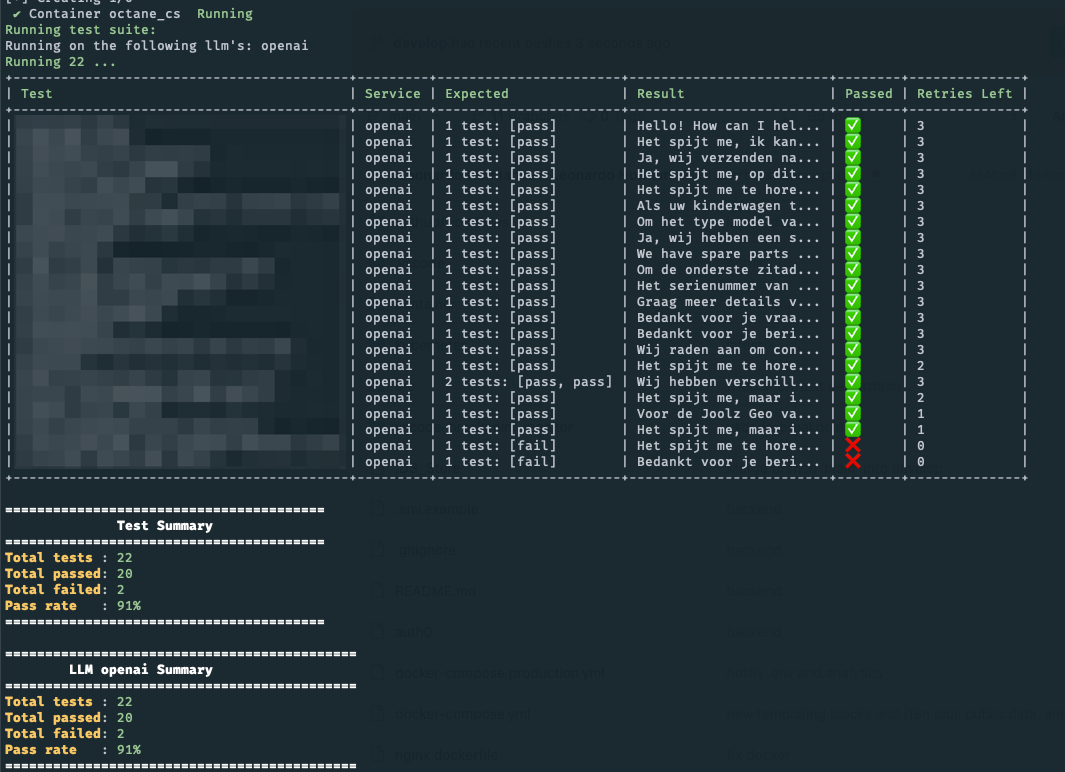

We already explained that the same question can produce noticeably different answers. The images above show test results from two runs between which we changed only one prompt.

As you can see, changing a single sentence led to three additional tests passing.

This shows the importance of having automated tests available when working with LLMs. Otherwise, you will never know whether new changes to the code are improving the final outputs.
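One simple way to see the effect of a change is to compare the pass/fail results of two test runs. The sketch below assumes each run is stored as a mapping from test name to result; the function name `compare_runs` and the test names and values are made up purely for illustration and are not our real results.

```python
from typing import Dict, List


def compare_runs(
    before: Dict[str, bool], after: Dict[str, bool]
) -> Dict[str, List[str]]:
    """List the tests whose outcome changed between two runs."""
    # Only tests present in both runs can be compared.
    shared = sorted(set(before) & set(after))
    return {
        "fixed": [name for name in shared if not before[name] and after[name]],
        "broken": [name for name in shared if before[name] and not after[name]],
    }


# Illustrative data only: results before and after a prompt change.
before = {
    "greeting": True,
    "order_status": False,
    "return_policy": False,
    "product_size": True,
}
after = {
    "greeting": True,
    "order_status": True,
    "return_policy": True,
    "product_size": True,
}

print(compare_runs(before, after))
# {'fixed': ['order_status', 'return_policy'], 'broken': []}
```

A non-empty "broken" list is the signal to investigate before shipping the change.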

The Impact of LLM Testing

As shown above, the results have been remarkable. Our development velocity has significantly increased, and we are more confident in the accuracy of our AI agent's responses to our clients' visitors.

If you are interested in utilizing our AI agents, please get in touch. Additionally, if you want to learn more about our testing suite, feel free to contact us.